Operation and Grand Central Dispatch interview questions

Multithreading iOS interview questions, gcd, operation, operation queue

In this article, I am going to cover questions and concepts related to GCD, multithreading, and Operation. Let's start.

GCD

Because GCD operates at the system level, it can better accommodate the needs of all running applications, matching them to the available system resources in a balanced fashion.

Managing Dispatch Queues

GCD provides and manages FIFO queues to which your application can submit tasks in the form of block objects (closures in Swift). Work submitted to dispatch queues is executed on a pool of threads fully managed by the system. No guarantee is made as to the thread on which a task executes.

Synchronous and Asynchronous Execution

Each work item can be executed either synchronously or asynchronously. When a work item is executed synchronously with the sync method, the program waits until execution finishes before the method call returns. When a work item is executed asynchronously with the async method, the method call returns immediately.
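A minimal sketch of the difference, assuming a serial queue with a made-up label and a small hypothetical `EventLog` helper that exists only for this illustration:

```swift
import Dispatch

/// Hypothetical helper so closures can append to a shared log.
/// Every access happens on the serial queue below, which keeps it safe.
final class EventLog: @unchecked Sendable {
    var entries: [String] = []
}

func syncVersusAsyncDemo() -> [String] {
    let queue = DispatchQueue(label: "com.example.sync-async") // assumed label
    let log = EventLog()

    // async returns immediately; the closure runs later on the queue.
    queue.async { log.entries.append("async work") }

    // sync blocks the caller until this closure, and everything queued
    // before it on the serial queue, has finished.
    queue.sync { log.entries.append("sync work") }

    // Read the log on the queue as well.
    return queue.sync { log.entries }
}
```

Because the queue is serial, the asynchronous item always runs before the synchronous one that was submitted after it.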

Serial and Concurrent Queues

A dispatch queue can be either serial, so that work items are executed one at a time, or concurrent, so that work items are dequeued in order but can run at the same time and finish in any order. Both serial and concurrent queues dequeue work items in first-in, first-out (FIFO) order.
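The following sketch records the order in which work items finish on a given queue. The `Results` class and the function name are assumptions made for this example, not part of GCD:

```swift
import Dispatch
import Foundation

/// Hypothetical lock-protected collector for completion order.
final class Results: @unchecked Sendable {
    private let lock = NSLock()
    private var values: [Int] = []

    func append(_ value: Int) {
        lock.lock()
        values.append(value)
        lock.unlock()
    }

    var snapshot: [Int] {
        lock.lock()
        defer { lock.unlock() }
        return values
    }
}

/// Submits `count` work items and returns the order in which they finished.
func completionOrder(on queue: DispatchQueue, count: Int) -> [Int] {
    let results = Results()
    let group = DispatchGroup()
    for index in 0..<count {
        queue.async(group: group) { results.append(index) }
    }
    group.wait() // block until every submitted item has finished
    return results.snapshot
}

func makeSerialQueue() -> DispatchQueue {
    DispatchQueue(label: "com.example.serial") // assumed label
}

func makeConcurrentQueue() -> DispatchQueue {
    DispatchQueue(label: "com.example.concurrent", attributes: .concurrent)
}
```

On the serial queue the completion order always matches the submission order. On the concurrent queue every item still runs, but the order in which they finish is not guaranteed.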

Dispatch Types:

DispatchWorkItem

DispatchWorkItem encapsulates work that can be performed. A work item can be dispatched onto a DispatchQueue and within a DispatchGroup. A DispatchWorkItem can also be set as a DispatchSource event, registration, or cancel handler.
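As a small illustration, the sketch below dispatches two work items onto a serial queue and cancels one of them before it starts. The queue label and the `RunLog` helper are assumptions for this example:

```swift
import Dispatch

/// Hypothetical helper; only touched on the serial queue below.
final class RunLog: @unchecked Sendable {
    var entries: [String] = []
}

func workItemDemo() -> (entries: [String], secondWasCancelled: Bool) {
    let queue = DispatchQueue(label: "com.example.work-item") // assumed label
    let log = RunLog()

    let first = DispatchWorkItem { log.entries.append("first") }
    let second = DispatchWorkItem { log.entries.append("second") }

    // Cancelling before the item starts makes its execution a no-op.
    second.cancel()

    queue.async(execute: first)
    queue.async(execute: second)

    first.wait() // block until the first item has finished

    // A sync read on the serial queue runs after both items were processed.
    let entries = queue.sync { log.entries }
    return (entries, second.isCancelled)
}
```

Note that cancellation only prevents a work item from starting; it does not interrupt one that is already running.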

DispatchSemaphore

DispatchSemaphore provides an efficient implementation of a traditional counting semaphore, which can be used to control access to a resource across multiple execution contexts.

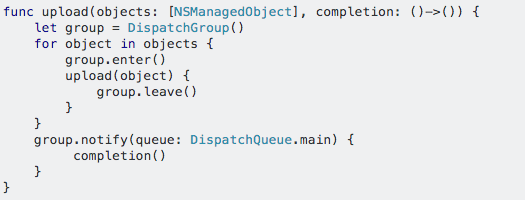

DispatchGroup

DispatchGroup allows for aggregate synchronization of work. You can use them to submit multiple different work items and track when they all complete, even though they might run on different queues. This behavior can be helpful when progress can’t be made until all of the specified tasks are complete.
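A minimal sketch of that pattern: two halves of an array are summed on two different queues, and the caller waits on the group before combining the results. `Totals` and `sumInParallel` are hypothetical names used only here:

```swift
import Dispatch
import Foundation

/// Hypothetical lock-protected accumulator.
final class Totals: @unchecked Sendable {
    private let lock = NSLock()
    private var sum = 0

    func add(_ value: Int) {
        lock.lock()
        sum += value
        lock.unlock()
    }

    var value: Int {
        lock.lock()
        defer { lock.unlock() }
        return sum
    }
}

func sumInParallel(_ numbers: [Int]) -> Int {
    let group = DispatchGroup()
    let totals = Totals()
    let firstQueue = DispatchQueue(label: "com.example.group.first") // assumed label
    let secondQueue = DispatchQueue.global(qos: .utility)

    let middle = numbers.count / 2
    let firstHalf = Array(numbers[..<middle])
    let secondHalf = Array(numbers[middle...])

    // Both items belong to the same group even though they run on different queues.
    firstQueue.async(group: group) { totals.add(firstHalf.reduce(0, +)) }
    secondQueue.async(group: group) { totals.add(secondHalf.reduce(0, +)) }

    group.wait() // progress cannot continue until both partial sums are in
    return totals.value
}
```

In UI code you would normally prefer `group.notify(queue:)` over `group.wait()` so that the calling thread is not blocked.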

DispatchData

DispatchData objects manage a memory-based data buffer. The data buffer is exposed as a contiguous block of memory, but internally, it may be comprised of multiple, discontiguous blocks of memory.

DispatchTime

DispatchTime represents a point in time relative to the default clock with nanosecond precision. On Apple platforms, the default clock is based on the Mach absolute time unit.

DispatchSource

DispatchSource provides an interface for monitoring low-level system objects such as Mach ports, Unix descriptors, Unix signals, and VFS nodes for activity and submitting event handlers to dispatch queues for asynchronous processing when such activity occurs.

DispatchIO

DispatchIO provides a channel to perform operations on a file descriptor, using either stream-based or random-access semantics for accessing its contents.

DispatchObject

DispatchObject is the base class for many dispatch types, including DispatchQueue, DispatchGroup, and DispatchSource.

Benefits of Operation

1. Operation Dependencies

Dependencies are a convenient way to execute operations in a specific order. You can add and remove dependencies for an operation using the addDependency(_:) and removeDependency(_:) methods. By default, an operation object that has dependencies is not considered ready until all of the operations it depends on have finished executing. Once the last of those operations finishes, however, the operation object becomes ready and able to execute.

The dependencies supported by Operation make no distinction about whether a dependent operation finished successfully or unsuccessfully. (In other words, canceling an operation similarly marks it as finished.) It is up to you to determine whether an operation with dependencies should proceed in cases where its dependent operations were cancelled or did not complete their task successfully. This may require you to incorporate some additional error tracking capabilities into your operation objects.
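A short sketch of a dependency between two operations. The operation names and the `StepLog` helper are made up for the example:

```swift
import Foundation

/// Hypothetical lock-protected log of executed steps.
final class StepLog: @unchecked Sendable {
    private let lock = NSLock()
    private var steps: [String] = []

    func append(_ step: String) {
        lock.lock()
        steps.append(step)
        lock.unlock()
    }

    var entries: [String] {
        lock.lock()
        defer { lock.unlock() }
        return steps
    }
}

func runWithDependency() -> [String] {
    let log = StepLog()
    let download = BlockOperation { log.append("download") }
    let parse = BlockOperation { log.append("parse") }

    // parse is not ready until download has finished.
    parse.addDependency(download)

    let queue = OperationQueue()
    // The order in which operations are added does not matter;
    // the dependency decides the execution order.
    queue.addOperations([parse, download], waitUntilFinished: true)
    return log.entries
}
```

Even though `parse` is added to the queue first, it only starts once `download` has finished.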

2. KVO-Compliant Properties

The Operation class (NSOperation in Objective-C) is key-value coding (KVC) and key-value observing (KVO) compliant for several of its properties. As needed, you can observe these properties to control other parts of your application. To observe the properties, use the following key paths:

- isCancelled (read-only)

- isAsynchronous (read-only)

- isExecuting (read-only)

- isFinished (read-only)

- isReady (read-only)

- dependencies (read-only)

- queuePriority (readable and writable)

- completionBlock (readable and writable)

Although you can attach observers to these properties, you should not use Cocoa bindings to bind them to elements of your application’s user interface. Code associated with your user interface typically must execute only in your application’s main thread. Because an operation may execute in any thread, KVO notifications associated with that operation may similarly occur in any thread.

3. Maintaining Operation Object States

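An operation queue can be suspended and resumed, and individual operations can be cancelled. Operations also expose their state through properties such as isReady, isExecuting, isFinished, and isCancelled. Once you dispatch a plain block using Grand Central Dispatch, you have far less control over, or insight into, the execution of that task; a DispatchWorkItem can be cancelled, but only before it starts running.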
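A minimal sketch of this kind of control, assuming a queue that is suspended while operations are added and one operation that is cancelled before it ever starts. `ControlLog` is a hypothetical helper:

```swift
import Foundation

/// Hypothetical lock-protected log.
final class ControlLog: @unchecked Sendable {
    private let lock = NSLock()
    private var items: [String] = []

    func append(_ item: String) {
        lock.lock()
        items.append(item)
        lock.unlock()
    }

    var entries: [String] {
        lock.lock()
        defer { lock.unlock() }
        return items
    }
}

func suspendAndCancelDemo() -> [String] {
    let log = ControlLog()
    let queue = OperationQueue()
    queue.maxConcurrentOperationCount = 1
    queue.isSuspended = true // no operation starts while the queue is suspended

    let kept = BlockOperation { log.append("kept") }
    let dropped = BlockOperation { log.append("dropped") }
    queue.addOperation(kept)
    queue.addOperation(dropped)

    dropped.cancel()          // cancelled before it starts, so its block never runs
    queue.isSuspended = false // resume the queue
    queue.waitUntilAllOperationsAreFinished()
    return log.entries
}
```

Suspending a queue only stops it from starting new operations; operations that are already executing keep running.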

When to use Operation or Grand Central Dispatch?

If you need to perform several tasks in a specific order, operations are a good solution. In other words, choose Operation only when you are actually going to use the benefits it offers.

Grand Central Dispatch is ideal if you just need to dispatch a block of code to a serial or concurrent queue. Another benefit is that you can keep related code together.

Question Time:

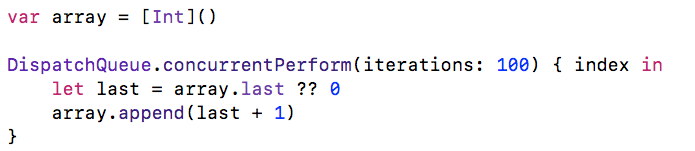

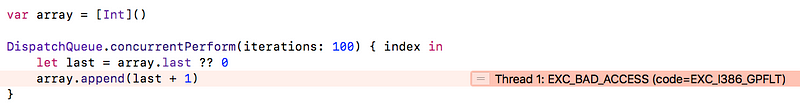

1. Write code that demonstrates a thread-safety issue.

This problem is called a race condition. It can occur whenever multiple threads access a shared resource without making sure that one thread has finished operating on the resource before another one begins accessing it.
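One possible sketch is a check-then-act race on a bank balance. Each individual read and write is locked, but the combined "check the balance, then withdraw" step is not. The `Account` class is hypothetical, and the `bothChecked` group is an artificial device that forces the unlucky interleaving so the result is reproducible:

```swift
import Dispatch
import Foundation

/// Hypothetical account: single reads and writes are locked,
/// but nothing protects a read followed by a write.
final class Account: @unchecked Sendable {
    private let lock = NSLock()
    private var balance: Int

    init(balance: Int) { self.balance = balance }

    func read() -> Int {
        lock.lock()
        defer { lock.unlock() }
        return balance
    }

    func subtract(_ amount: Int) {
        lock.lock()
        balance -= amount
        lock.unlock()
    }
}

func unsafeWithdrawals() -> Int {
    let account = Account(balance: 100)
    let bothChecked = DispatchGroup() // demo only: forces the bad interleaving
    let done = DispatchGroup()
    bothChecked.enter()
    bothChecked.enter()

    for _ in 0..<2 {
        DispatchQueue.global().async(group: done) {
            let canWithdraw = account.read() >= 100 // check
            bothChecked.leave()
            bothChecked.wait() // both threads have now passed the check
            if canWithdraw {
                account.subtract(100) // act on a stale check
            }
        }
    }

    done.wait()
    return account.read()
}
```

Both threads see a balance of 100, both withdraw, and the account ends up overdrawn. In real code the interleaving is not forced, which is why such bugs show up only some of the time.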

2. When should we suspect a race condition?

When multiple threads operate on the same variables and you get unexpected results only some of the time.

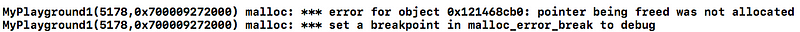

3. How do you handle a race condition in iOS?

In order to prevent this, multiple threads need to access shared resources in a mutually exclusive way.
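One common way to get mutually exclusive access is to route every read and write through a private serial queue. The sketch below assumes a hypothetical `SafeCounter` class and queue label:

```swift
import Dispatch

/// Hypothetical counter whose state is only touched on a private serial queue.
final class SafeCounter: @unchecked Sendable {
    private let queue = DispatchQueue(label: "com.example.safe-counter") // assumed label
    private var value = 0

    func increment() {
        queue.sync { value += 1 } // one caller at a time
    }

    var current: Int {
        queue.sync { value }
    }
}

func countConcurrently(iterations: Int) -> Int {
    let counter = SafeCounter()
    // Runs the closure `iterations` times, spread across several threads.
    DispatchQueue.concurrentPerform(iterations: iterations) { _ in
        counter.increment()
    }
    return counter.current
}
```

Because every increment passes through the serial queue, no update is lost regardless of how many threads call `increment()`.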

4. What are the Challenges of Concurrent Programming?

- Sharing of resources or race condition

- Mutual exclusion

- Deadlocks

- Starvation

- Priority inversion

5. How can you achieve mutual exclusion in iOS?

There are several ways to achieve this kind of lock, but I think a dispatch semaphore is better than the others because it seems to have the least overhead. According to the “Replacing Semaphore Code” section of Apple's documentation, a dispatch semaphore does not call down into the kernel unless the semaphore is already locked (its value is zero), which is the only case in which the calling thread has to block. I think the semaphore is non-zero most of the time (although this is, of course, app-specific), so we can avoid a lot of overhead.

One more comment on dispatch semaphores, for the opposite scenario. If your threads have different execution priorities and the higher-priority threads hold the semaphore for a long time, a dispatch semaphore may not be the right solution, because there is no “queue” among the waiting threads. In this case, higher-priority threads acquire the semaphore most of the time, while lower-priority threads acquire it only occasionally and mostly just wait. If this behavior is not acceptable for your application, consider a dispatch queue instead.
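A minimal sketch of a dispatch semaphore used as a lock. The semaphore starts at 1, so only one thread at a time can be between `wait()` and `signal()`. The class and function names are assumptions for this example:

```swift
import Dispatch

/// Hypothetical counter guarded by a binary semaphore.
final class SemaphoreCounter: @unchecked Sendable {
    private let semaphore = DispatchSemaphore(value: 1) // 1 = unlocked
    private var value = 0

    func increment() {
        semaphore.wait()   // lock: blocks only if another thread holds it
        value += 1
        semaphore.signal() // unlock
    }

    var current: Int {
        semaphore.wait()
        defer { semaphore.signal() }
        return value
    }
}

func countWithSemaphore(iterations: Int) -> Int {
    let counter = SemaphoreCounter()
    DispatchQueue.concurrentPerform(iterations: iterations) { _ in
        counter.increment()
    }
    return counter.current
}
```

Every `wait()` must be balanced by a `signal()`, otherwise later callers block forever.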

6. Write code that produces a deadlock.

The more resources you share between threads and the more locks you take, the greater your risk of running into a deadlock.
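A sketch of the classic cause: two tasks take the same two locks in opposite order. So that the example terminates, the second lock is requested with a timeout; with a plain `wait()` in its place, both tasks would block forever. All names here are hypothetical, and the two groups exist only to make the interleaving reproducible:

```swift
import Dispatch
import Foundation

/// Hypothetical lock-protected counter of failed lock attempts.
final class Outcome: @unchecked Sendable {
    private let lock = NSLock()
    private var count = 0

    func recordTimeout() {
        lock.lock()
        count += 1
        lock.unlock()
    }

    var timeouts: Int {
        lock.lock()
        defer { lock.unlock() }
        return count
    }
}

/// Returns how many of the two tasks failed to get their second lock.
func oppositeLockOrderDemo() -> Int {
    let lockA = DispatchSemaphore(value: 1)
    let lockB = DispatchSemaphore(value: 1)
    let bothHoldFirst = DispatchGroup() // demo only: both tasks hold one lock
    let bothGaveUp = DispatchGroup()    // demo only: keep locks held until both tried
    let finished = DispatchGroup()
    let outcome = Outcome()

    @Sendable func task(first: DispatchSemaphore, second: DispatchSemaphore) {
        first.wait()
        bothHoldFirst.leave()
        bothHoldFirst.wait()
        // A plain second.wait() here would never return: that is the deadlock.
        if second.wait(timeout: .now() + .milliseconds(200)) == .timedOut {
            outcome.recordTimeout()
        } else {
            second.signal()
        }
        bothGaveUp.leave()
        bothGaveUp.wait()
        first.signal()
    }

    bothHoldFirst.enter()
    bothHoldFirst.enter()
    bothGaveUp.enter()
    bothGaveUp.enter()
    DispatchQueue.global().async(group: finished) { task(first: lockA, second: lockB) }
    DispatchQueue.global().async(group: finished) { task(first: lockB, second: lockA) }
    finished.wait()
    return outcome.timeouts
}
```

Both tasks time out because each one holds the lock the other needs. Acquiring the locks in the same order in both tasks removes the cycle.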

7. How would you solve the deadlock from the question above in iOS?

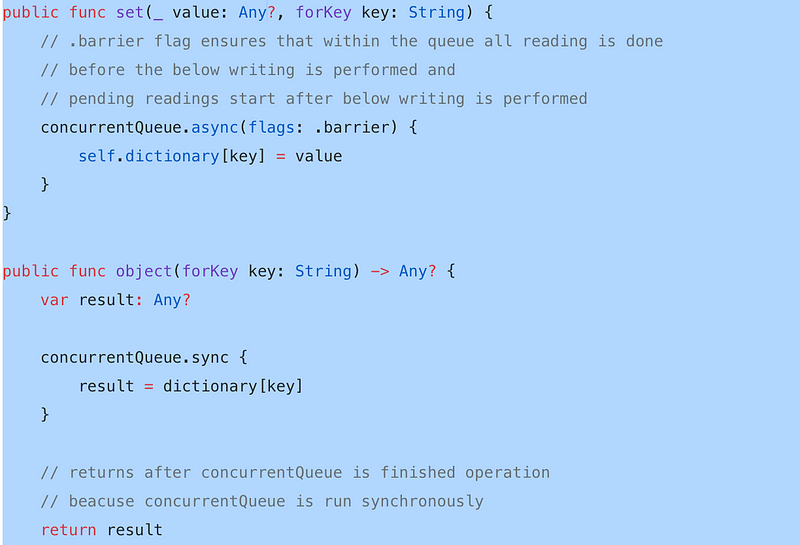

8. How would you solve the starvation (readers-writers) problem in iOS?

Locking shared resources can result in the readers-writers problem. In many cases, it would be wasteful to restrict reading access to a resource to one access at a time. Therefore, taking a reading lock is allowed as long as there is no writing lock on the resource. In this situation, a thread that is waiting to acquire a write lock can be starved by more read locks occurring in the meantime.

Using concurrent queues with barriers helps us improve and speed up our code while eliminating the readers-writers problem.
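A minimal sketch of the barrier approach: reads run concurrently with `sync`, while writes are submitted with the `.barrier` flag so each write has the queue to itself. `ThreadSafeStore` and its queue label are assumptions for this example:

```swift
import Dispatch

/// Hypothetical dictionary wrapper using a concurrent queue with barriers.
final class ThreadSafeStore: @unchecked Sendable {
    private let queue = DispatchQueue(label: "com.example.store",
                                      attributes: .concurrent) // assumed label
    private var storage: [String: Int] = [:]

    /// Reads may overlap with other reads.
    func value(forKey key: String) -> Int? {
        queue.sync { storage[key] }
    }

    /// A barrier block waits for earlier items, runs alone,
    /// and only then lets later items start.
    func set(_ value: Int, forKey key: String) {
        queue.async(flags: .barrier) {
            self.storage[key] = value
        }
    }

    var count: Int {
        queue.sync { storage.count }
    }
}

func fillStoreConcurrently(entries: Int) -> ThreadSafeStore {
    let store = ThreadSafeStore()
    DispatchQueue.concurrentPerform(iterations: entries) { index in
        store.set(index, forKey: "key-\(index)")
    }
    return store
}
```

A read submitted after a write is queued behind that write's barrier, so it always observes the written value.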

9. What is Priority Inversion?

Priority inversion describes a condition where a lower-priority task blocks a higher-priority task from executing, effectively inverting task priorities. Since GCD exposes background queues with different priorities, including one that is even I/O throttled, it’s good to know about this possibility.

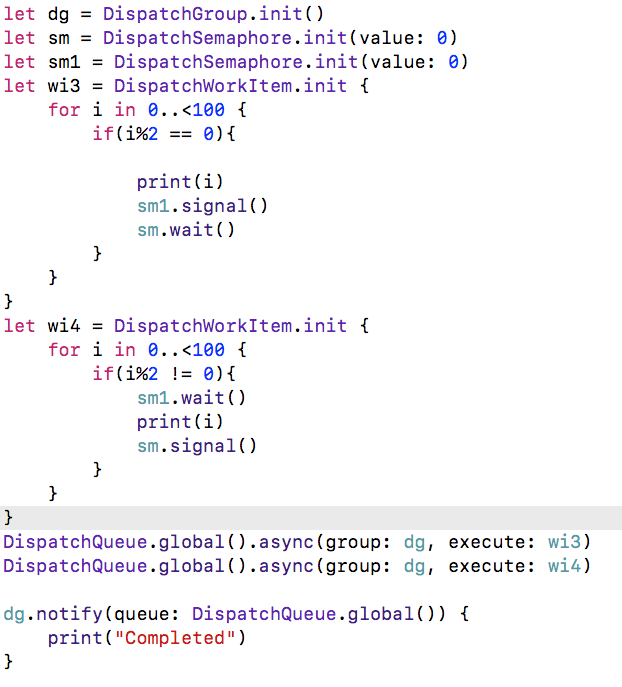

10. Write code that prints numbers in sequence, with one thread responsible for the odd numbers and another for the even numbers.

Note: You can use a semaphore in two different ways:

- To say when work or a resource is ready. In this case you start the semaphore at 0. The creator calls signal when something is ready. The consumer calls wait to wait for the expected item or resource.

- To limit the number of concurrent operations, requests, or usages. In this case you start the semaphore at a positive value, like 4. The users each call wait, and if a resource is available they are allowed to continue. If not, they are blocked. When each has finished with the resource, they call signal.
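One way to sketch the even/odd exercise is with two semaphores used in the first way described above: each one signals that it is the other thread's turn. Both semaphores start at 0 and the odd thread is given the first turn explicitly. The function and helper names are assumptions for this example:

```swift
import Dispatch

/// Hypothetical collector; the strict turn-taking below means
/// only one thread touches it at a time.
final class Printed: @unchecked Sendable {
    var numbers: [Int] = []
}

func numbersInSequence(upTo limit: Int) -> [Int] {
    let oddTurn = DispatchSemaphore(value: 0)
    let evenTurn = DispatchSemaphore(value: 0)
    let finished = DispatchGroup()
    let printed = Printed()

    // Thread responsible for odd numbers.
    DispatchQueue.global().async(group: finished) {
        for number in stride(from: 1, through: limit, by: 2) {
            oddTurn.wait() // wait for our turn
            print(number)
            printed.numbers.append(number)
            evenTurn.signal() // hand over to the even thread
        }
    }

    // Thread responsible for even numbers.
    DispatchQueue.global().async(group: finished) {
        for number in stride(from: 2, through: limit, by: 2) {
            evenTurn.wait()
            print(number)
            printed.numbers.append(number)
            oddTurn.signal() // hand back to the odd thread
        }
    }

    oddTurn.signal() // the odd thread goes first
    finished.wait()
    return printed.numbers
}
```

Starting both semaphores at 0 also avoids deallocating a semaphore whose value is below its initial value, which libdispatch treats as a programming error.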

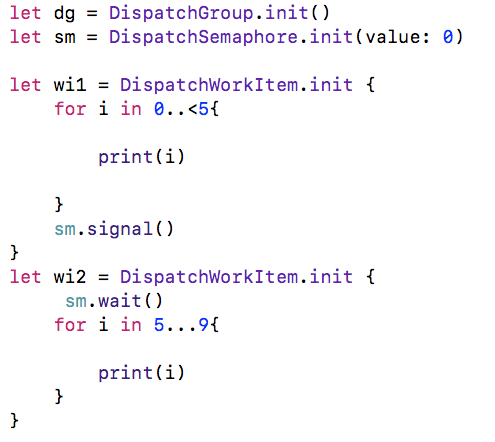

11. Write code that adds a dependency between two concurrent threads using a semaphore.
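A possible sketch: the semaphore starts at 0, the dependent task waits on it, and the first task signals when it is done. `OrderLog` and the task names are hypothetical:

```swift
import Dispatch
import Foundation

/// Hypothetical lock-protected log of the execution order.
final class OrderLog: @unchecked Sendable {
    private let lock = NSLock()
    private var items: [String] = []

    func append(_ item: String) {
        lock.lock()
        items.append(item)
        lock.unlock()
    }

    var entries: [String] {
        lock.lock()
        defer { lock.unlock() }
        return items
    }
}

func runSecondAfterFirst() -> [String] {
    let firstFinished = DispatchSemaphore(value: 0) // 0 = "not ready yet"
    let done = DispatchGroup()
    let log = OrderLog()

    // The dependent task is submitted first but must wait for the signal.
    DispatchQueue.global().async(group: done) {
        firstFinished.wait()
        log.append("second")
    }

    DispatchQueue.global().async(group: done) {
        log.append("first")
        firstFinished.signal() // unblock the dependent task
    }

    done.wait()
    return log.entries
}
```

The order holds no matter which task the system happens to start first.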

Terminology:

- Race Condition: A race condition occurs when two or more threads can access shared data and they try to change it at the same time.

- Deadlock: A deadlock occurs when two or sometimes more tasks wait for the other to finish, and neither ever does.

- Readers-Writers problem: Multiple threads reading at the same time while there should be only one thread writing.

- Readers-writer lock: Such a lock allows concurrent read-only access to the shared resource while write operations require exclusive access.

- Dispatch Barrier Block: Dispatch barrier blocks create a serial-style bottleneck when working with concurrent queues.

References: